Why I'm boosting more on Mastodon

You know what I envied of those influencers with millions of followers? The magic of tweeting a question and getting valuable answers and advice from knowledgeable people.

I'm no celebrity, but on Mastodon I'm getting the same experience with orders of magnitude fewer followers.

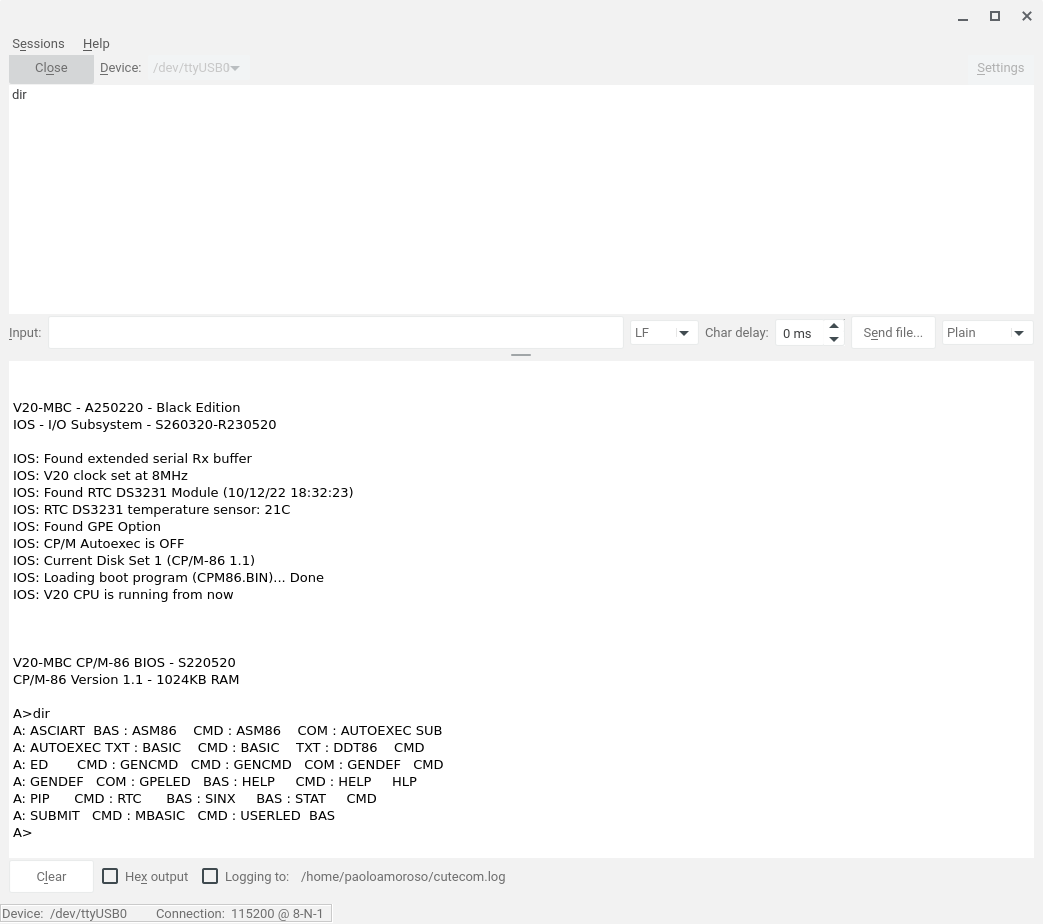

As a retrocomputing enthusiast, it's a lot of fun to explore and program my V20-MBC homebrew Nec V20 computer. But CP/M-86, one of the operating systems the V20-MBC runs, was eclipsed by MS-DOS back in the day, leaving little surviving documentation and literature. Especially about CP/M-86 development.

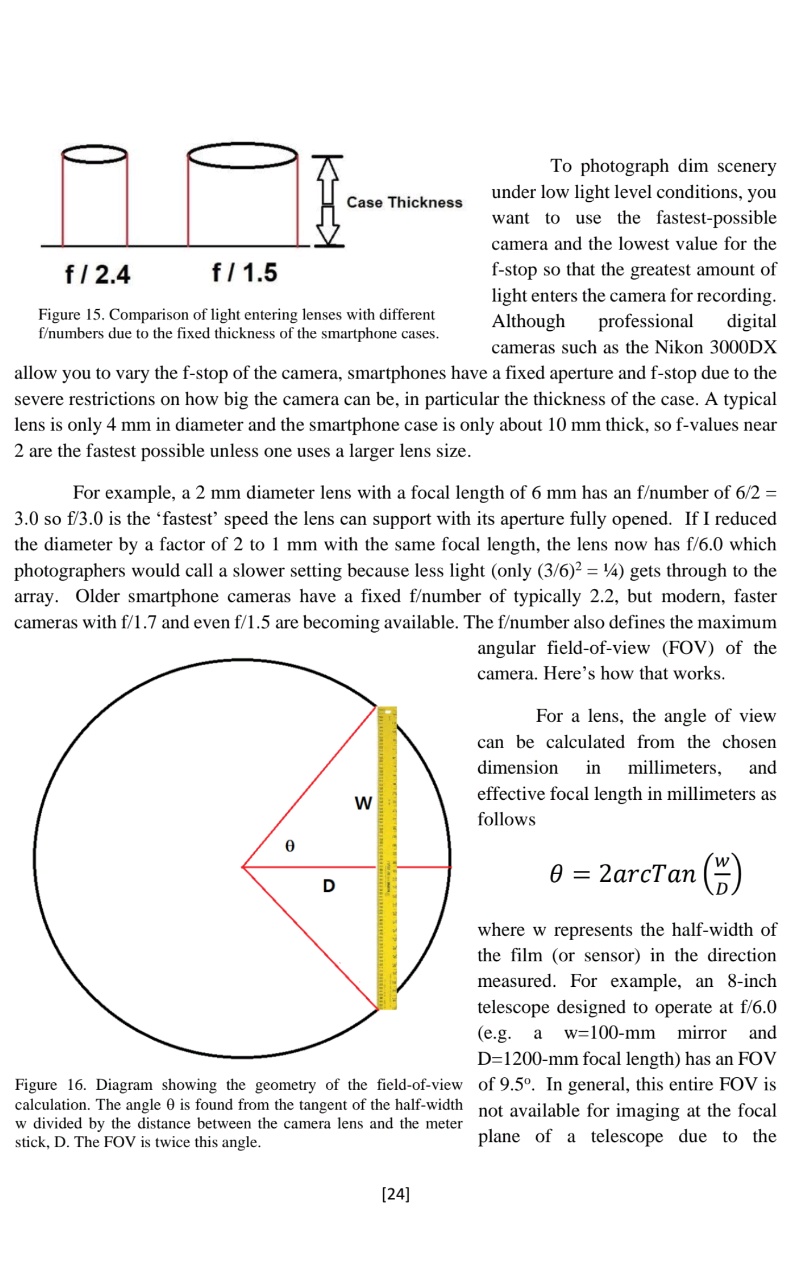

So the Open Library listing of the book CP/M-86 Assembly Language Programming by Jon Lindsay (Brady Communications Co., 1986) caught my attention. Sadly, the text isn't available. I decided to try to track down the book, starting by asking for help on comp.os.cpm.

Without expecting much, I also posted a toot to my Mastodon account in case anyone had useful clues on the book. What happened next blew my mind.

Within a day the post was boosted over a hundred times, got some fifty stars, and received dozens of valuable replies. The replies contributed promising leads, tagged others who might know something, suggested workarounds or other lines of inquiry, and started a few interesting side conversations. Everyone went out of their way to assist.

I was speechless, breathless. Literally. I didn't even know how to adequately thank the many who chimed in.

This isn't possible on Twitter, where the algorithms boost celebrities and influencers and bury everyone else.

You may think this overwhelming support has something to do with my 1.1K Mastodon followers. But I've been having a similar experience since joining Mastodon ten months ago. I'd say the critical mass is somewhere between a few dozen and a couple hundred followers.

I want to give back. I want to give others the opportunity of a similar positive experience, which is within reach of everyone in the Fediverse. Boosts proved crucial to amplify my quest for help, so I'm boosting more toots for a chance to reach someone who may be interested or willing to help.

People are the ultimate algorithm.

#fediverse #retrocomputing #books

Discuss... Email | Reply @amoroso@oldbytes.space